Setting Up Phi-3 LLM Locally with LM Studio

Ready to supercharge your AI projects with Phi-3 LLM?

No more latency, no more internet worries and no more data privacy concerns!!

Introduction

Recently I was travelling and, like any other college student with an assignment due in an hour, I chose to swallow my pride and open ChatGPT for some help.

But I faced persistent internet issues on the road and almost gave up on the assignment. I wish I could have submitted the assignment (I did, and I scored pretty well :)

The same day I searched and found a way to summarize PDFs and research topics for my assignments, and all of that offline.

Now you know the motivation behind the search. I found a tool that can run LLMs locally on your device, i.e. completely offline!!!


But the thing with running an LLM locally is that you need some decent hardware in your device to run it and chat with it.

Looking at my laptop’s specs, I lost hope again. But then I remembered something about a small LLM recently launched by Microsoft, which they pitched as able to run even on mobile devices.

Sooo………

Have you heard about Phi-3?

PLEASE SAY NO….. PLEASE SAY NO…….

Oh really! You haven’t??

Let me tell you about them 😊 (Honestly I wanted to tell you guys)


What is Phi-3?

Phi-3 is a family of lightweight LLMs, or as some folks like to say, ‘SLMs’ (‘Small Language Models’), recently launched by Microsoft. It comprises these models:

- Phi-3 Medium (14B parameters)

- Phi-3 Small (7B parameters)

- Phi-3 Mini (3.8B parameters)

Phi-3 Mini is a very capable language model for its size: according to Microsoft, it holds its own against considerably larger models at producing and understanding text.

What’s so fascinating about Phi-3?

Well, what’s really exciting is that you can run Phi-3-mini (which has 3.8 billion parameters) directly on your own machine, making it incredibly accessible and convenient.
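To get a feel for why 3.8 billion parameters is laptop-friendly, here is a rough back-of-the-envelope sketch. It only counts the memory needed to hold the weights; the bit widths (16, 8 and 4 bits per parameter) are my own illustrative assumptions, not figures from Microsoft or LM Studio, and real usage will be higher once the context and runtime overhead are included.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


PHI3_MINI_PARAMS = 3.8e9  # parameter count quoted in this article

# Assumed precisions, for illustration only.
for bits in (16, 8, 4):
    size = weight_memory_gb(PHI3_MINI_PARAMS, bits)
    print(f"{bits}-bit weights: ~{size:.1f} GB")
```

So even at 16 bits per parameter the weights come to roughly 7.6 GB, and lower-precision versions of the model shrink that further.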

In addition to the standard Phi-3-mini with its 4,000-token context window, Microsoft also released “phi-3-mini-128K,” a version with a 128K-token context window. Microsoft also developed Phi-3 versions with 7 billion and 14 billion parameters, which it intends to release later and which are said to be “significantly more capable” than Phi-3-mini.

Well, if you ask me, there are some pretty awesome benefits to running Phi-3 locally.

- Enhanced Privacy and Security: Local deployment lowers the risk of data breaches because it gives you complete control over your data and allows you to make sure it’s not sent to external servers.

- Offline Capabilities: Phi-3 is ideal for offline or edge computing scenarios because it can run locally, even without an internet connection.

- Better Integration: A locally hosted Phi-3 is easier to connect to other local tools and systems, so it fits more smoothly into your workflows.

Now we have an LLM that we can use anytime, locally on our own machine.

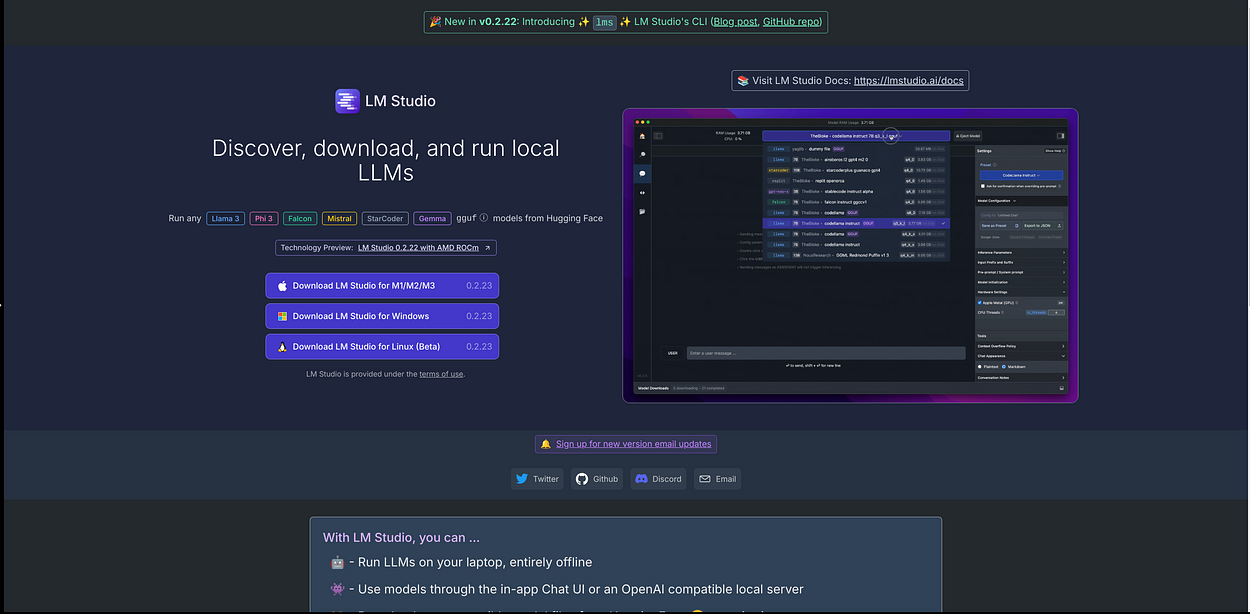

What is LM Studio?

It is a tool that we can install on our own machine to run compatible LLMs locally and completely offline, i.e. without relying on cloud-based services.

Some features this tool provides are:

- Discover and download compatible LLMs

- Run models locally offline

- Chat UI for testing and experimentation

- OpenAI-compatible local server

- Supports Llama, Mistral and StarCoder models from Hugging Face

By hosting the model locally and starting a local server, we can pair LM Studio with other tools such as AnythingLLM. The local server is OpenAI-compatible, which makes it easy to integrate with tools and services that already use OpenAI’s API.

If you have read any of my other articles, you know I have a thing for the open source community. LM Studio is free to download and use, though note that the desktop app itself is not open source.
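Here is a minimal sketch of what talking to that local server could look like from Python, using only the standard library. I am assuming the server is running at `http://localhost:1234/v1` (check the address shown in your Local Server tab) and that a model is already loaded; the model name `"phi-3"`, the system prompt and the helper names `build_chat_request` and `ask` are placeholders of mine, not anything defined by LM Studio.

```python
import json
import urllib.request

# Assumption: address of the LM Studio local server; adjust to what your app shows.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="phi-3", temperature=0.7):
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,  # placeholder name; use the identifier of your loaded model
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage (needs the local server running with a model loaded):
# print(ask("Summarize the benefits of running an LLM locally."))
```

Because the request follows the OpenAI chat format, a tool that lets you change its OpenAI base URL can usually be pointed at the same address.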


Now I have a mid-range laptop which can run Phi-3-Mini and I also know of a tool which can help run the LLM on my machine with a decent GUI.

I have been using LM Studio on a Linux-based distro and it has been smooth so far.

Prerequisites

To use the LLM locally with LM Studio, you need to have a device that fulfills the following requirements:

- At least 16GB of RAM

- NVIDIA/AMD GPUs are supported

- macOS 13.6 or newer (for Apple Silicon devices)

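- A compatible Python environment (e.g., Python 3.8 or higher) with the necessary dependencies installed, but only if you want to script against the local server; the LM Studio app itself does not need Python.

As per their website, LM Studio is compatible with the following operating systems: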

- macOS (M1/M2/M3)

- Windows

- Linux (beta)
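If you would rather check these requirements from a script, here is a small sketch. It only looks at the operating system name and the total RAM; it does not check your GPU, your macOS version or whether a Mac has Apple Silicon. The helper names are mine, and the RAM detection relies on `os.sysconf`, which is not available on Windows, so there it simply reports that it could not tell.

```python
import os
import platform

MIN_RAM_GB = 16  # from the requirements list above
# platform.system() reports macOS as "Darwin".
SUPPORTED_SYSTEMS = {"Darwin", "Windows", "Linux"}


def meets_ram_requirement(total_bytes, min_gb=MIN_RAM_GB):
    """True if total_bytes is at least min_gb gigabytes (1 GB = 10**9 bytes here)."""
    return total_bytes >= min_gb * 10**9


def total_ram_bytes():
    """Total physical RAM in bytes, or None where os.sysconf cannot tell us."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None


system = platform.system()
ram = total_ram_bytes()
print("OS listed as supported:", system in SUPPORTED_SYSTEMS)
if ram is None:
    print("Could not detect RAM on this platform; check it manually.")
else:
    print(f"RAM: {ram / 10**9:.1f} GB, meets minimum: {meets_ram_requirement(ram)}")
```

I count a gigabyte as 10**9 bytes on purpose: a machine sold as 16 GB usually reports a little less than 16 GiB to the operating system, and the stricter unit would wrongly fail it.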

Setting up LM Studio

Now that we know the motivation behind running an offline LLM and have the means to do so, let’s install the main thing!!!

- The first step is to install LM Studio from their official website.

- Once you open the website, you’ll see the download options. Download the installer for your operating system from the options listed there.

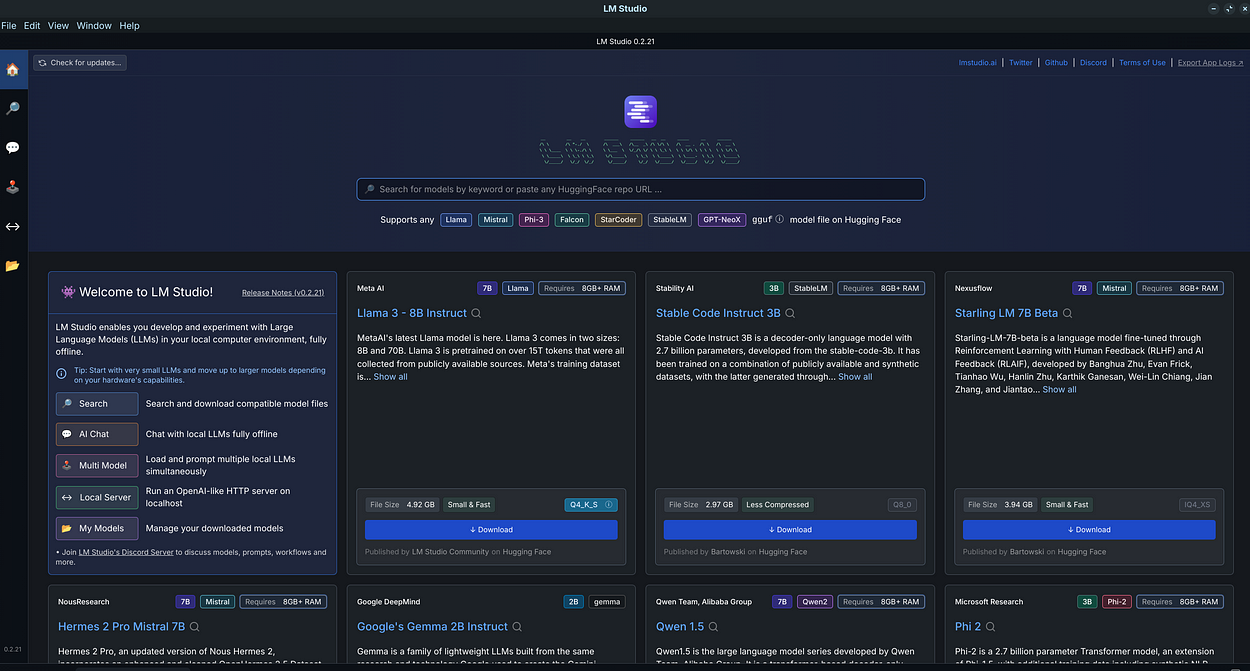

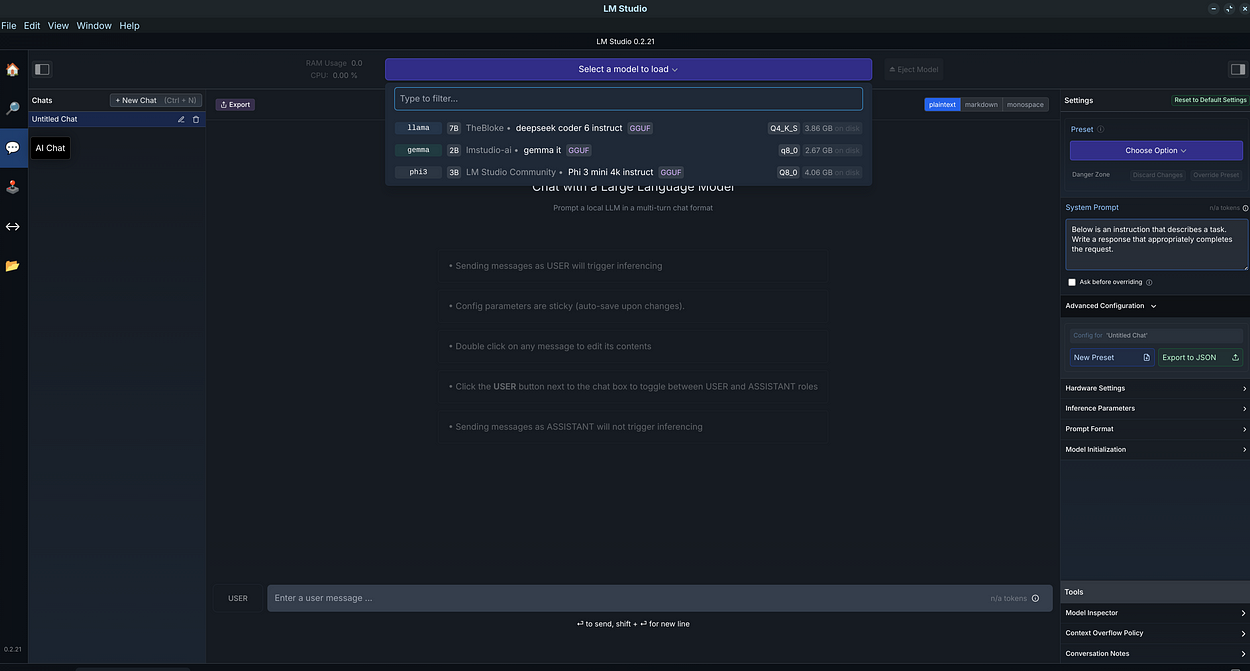

- After downloading and installing LM Studio, open it, and you’ll see something like this.

Here we can see there are tons of models available to be downloaded and run on our machine.

Now let me tell you about some major functional features of this tool.

- Search: We can search most of the models available on Hugging Face and download them to our local machine to run.

- AI Chat: This feature allows us to chat with the LLMs completely offline with a GUI instead of a CLI.

- Multi Model: Using this we can chat with multiple LLMs simultaneously. (This is one of my favourite features.)

- Local Server: This allows you to host an OpenAI-like HTTP server on your localhost.

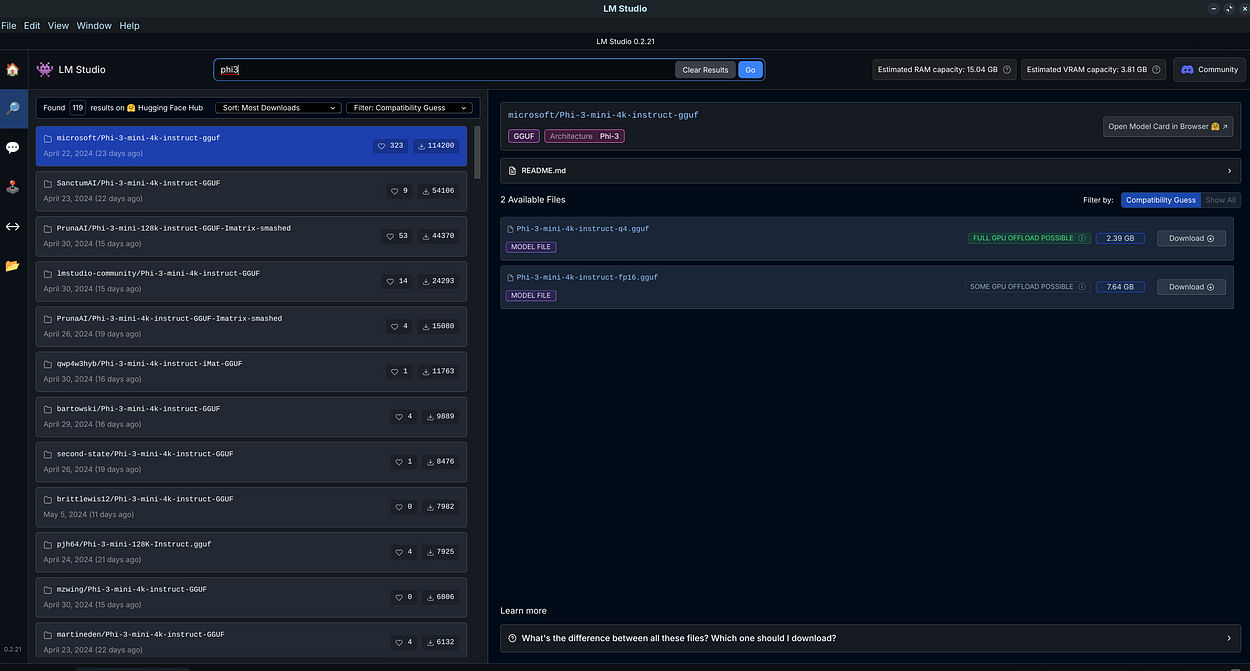

Configuring Phi-3

Now that we have installed LM Studio, we have to download the Phi-3 model onto our own machine.

For this we need to either find it on the home page or search for it in the search bar on the home page.

We can also download the same model from any other repository on Hugging Face.

Now click on the download button near the model name and wait for it to download.

Once it has downloaded, we have to load the model into the chat interface.

Click on the AI Chat -> Select a model to load -> You should see all the models which are downloaded on your device -> Select Phi-3

Now wait for some time so that the model can be loaded.

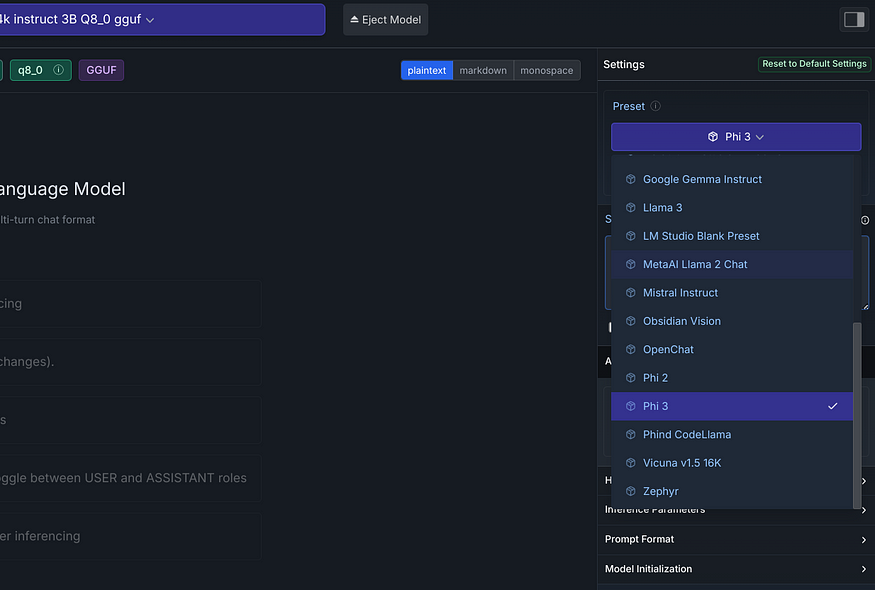
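If you also start the Local Server, you can confirm from a script that a Phi-3 model is visible to it. This is only a sketch: I am assuming the server answers at `http://localhost:1234/v1/models` with an OpenAI-style model list, and the model ids in `sample_response` are made up for illustration; yours will be whatever LM Studio reports.

```python
import json
import urllib.request

# Assumption: address of the LM Studio local server; adjust to what your app shows.
MODELS_URL = "http://localhost:1234/v1/models"


def find_model_ids(models_response, needle="phi-3"):
    """Return ids from an OpenAI-style model list whose name contains needle."""
    return [
        model["id"]
        for model in models_response.get("data", [])
        if needle in model["id"].lower()
    ]


def fetch_models(url=MODELS_URL):
    """Ask the local server which models it knows about (server must be running)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


# Made-up response in the OpenAI list format, so this part runs without a server.
sample_response = {
    "object": "list",
    "data": [
        {"id": "example/Phi-3-mini-4k-instruct-gguf", "object": "model"},
        {"id": "example/some-other-model", "object": "model"},
    ],
}
print(find_model_ids(sample_response))

# With the server running:
# print(find_model_ids(fetch_models()))
```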

Once the model is loaded:

Click on the preset option -> Select the model preset for the model which you loaded -> We will select Phi-3 in our case.

Once the preset is loaded, we are good to go and can chat with the LLM on our local machine.

Congratulations!

We’ve covered the basics of setting up Phi-3 locally using LM Studio, and explored the benefits of running a language model on your own machine.

Whether you’re a developer, researcher, or simply an AI enthusiast, I hope this article has inspired you to explore the possibilities of local language models.


So that’ll be all for today!

Hope to see you around for the next one!

Namaste 🙏

If you enjoyed reading this article, make sure to follow for more.
